Title: DADABOT interview

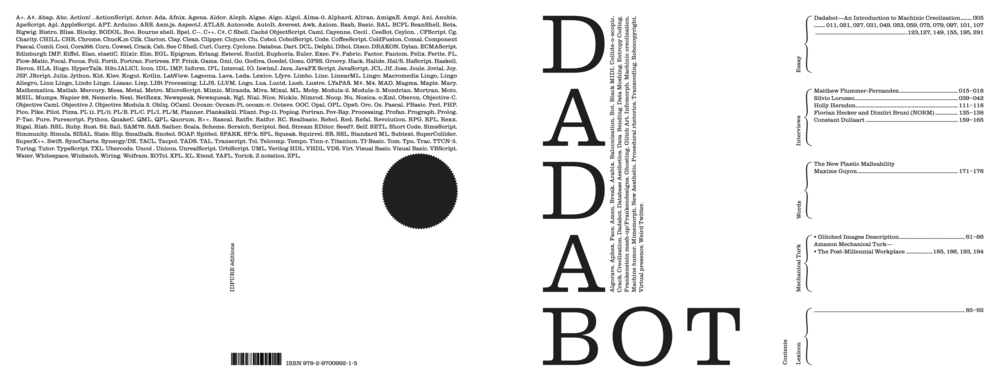

[Interview by Nicolas Nova published in Dadabot – Essay about the hybridization of cultural forms (music, visual arts, literature) produced by digital technologies (Nicolas Nova and Joel Vacheron eds). More info about the publication here.]

Nicolas Nova: Can you start with a short description about who you are and how you became interested in new forms of publications?

Silvio Lorusso: I’m an artist and designer currently based in Milan, Italy. My ongoing PhD research in Design Sciences at Iuav University of Venice is focused on experimental publishing which is informed, directly and indirectly, by digital technology.

I became interested in new forms of publications while I was doing an internship at the Institute of Network Cultures, an Amsterdam-based research centre founded and directed by media theorist Geert Lovink. I was asked to investigate different publishing channels and formats, such as Print On Demand and EPUB. I also interviewed several Dutch art and design publishers in order to understand the way in which they approached these emerging possibilities. Back then, I perceived a mix of curiosity and anxiety.

At the beginning, my experiments were quite frustrating, as EPUB seemed to me pretty limited from a design perspective. Similarly, POD’s quality was – and often still is – quite poor in terms of the book’s materiality. It took me some time to develop a specific focus on what can be loosely defined as “post-digital publishing”; this focus is highly influenced by the work of, among others, Alessandro Ludovico and Florian Cramer.

NN: More specifically, a big chunk of your work is devoted to algorithmic production of content. What’s exciting here? What are the best examples you saw recently? Why?

SL: I can identify three aspects that are relevant to me, each of which is “human-centered”, so to speak. The first one has to do with the relationship between algorithms and human labor. In much of the software we use on a daily basis, the machine part is only the tip of the iceberg. Consider Google Translate: it works only because it can harvest a huge quantity of documents produced by human beings. Human labor is increasingly hidden and presented as pure AI: the motto of Mechanical Turk, Amazon’s cheap labor platform, is “artificial artificial intelligence”. I think it’s important to develop strategies that highlight the human role. A good example in this sense is reCAPCHAT, a web-based piece by Jimpunk, in which users are asked to fill in a CAPTCHA, which is in turn anonymously tweeted by a dedicated account.

The second aspect can be summed up by saying that “reading is writing”. It has to do with the new forms of writing that are made explicit by computers’ tracking systems. Aside from generating novel textual material, these systems deeply affect the way in which content is produced. This is already happening in platforms such as Oyster and Scribd, where reading habits might soon determine the value of texts. We need to look at the feedback loops between reading and writing in order to have a broad understanding of the lifecycle of content. In relation to this, I think of Evan Roth’s Internet Cache Self Portrait series: big prints made out of the images automatically stored in the artist’s browser cache. “The new memoir is our browser history” according to uncreative writing guru Kenneth Goldsmith.

Finally, I think it’s fascinating to look at the human reaction to algorithmic reading and writing processes. Nowadays, it’s not uncommon to stumble upon bots talking to each other on Twitter, sometimes without even noticing. SEO has a profound impact on contemporary writing practices. The question is: to what extent do we adapt to these systems? According to Jaron Lanier, the renowned Turing test, more than showing whether a machine is able to think like a human being, manifests how humans lower themselves to a level that is accepted by machines. In her Spam Bibliography, writer Angela Genusa reorganises the spam she received via email in the form of an academic bibliography, somehow validating automatically generated texts that are commonly considered garbage.

NN: Can you describe 2 of your recent projects?

SL: I’d like to mention two projects that are connected to the points made above. The first one is a collaboration with German artist Sebastian Schmieg and Amazon Kindle users. Networked Optimization is a series of three crowdsourced versions of popular self-help books. Each book contains the full text, which is, however, invisible because it is set in white on a white background. The only text that remains readable consists of the so-called “popular highlights” – the passages that were underlined by many Kindle users – together with the number of highlighters. Each time a passage is underlined, it is automatically stored in Amazon’s data centers. The project puts in place a process of recursive optimization, in which self-help books, which are generally meant to “optimize” certain aspects of behavior, are in turn optimized by the readers themselves. While the coincidence of reading and writing is shown in its “natural” context of literature, the quantification characterizing popular highlights affects the value that readers attribute to a certain passage.

The second project is entitled Douglas Rushkoff’s New Book. Douglas Rushkoff, author of Program or Be Programmed, quit Facebook in 2013 because “it does things on our behalf when we’re not even there. It actively misrepresents us to our friends, and worse misrepresents those who have befriended us to still others.” Using MySocialBook – an online platform that allows users to print books from personal Facebook profiles, those of friends, and pages – I made a book out of the fan page that Rushkoff abandoned in 2013, selecting the period of time in which he was actively using it. The book’s layout is automatically determined by Facebook’s metadata; for instance, the most popular photo – paradoxically the one in which Rushkoff announces that he’s leaving the social network – occupies the first page, together with the number of likes it received.

NN: Most of the projects you conduct or are interested in via P–DPA are quite experimental. I’m definitely fascinated by them and I find them utterly intriguing. However, when working with friends and clients on the future of publishing, I find that they struggle to understand what these projects mean and how they anticipate the evolution of publications. What’s your take on this? How do you think examples such as the ones discussed in the second question pave the way for the future of publishing?

SL: I think that the reason why it’s difficult to relate these experimental works to the publishing industry is precisely that they push the boundaries of what we generally mean by “publishing”. The examples discussed in the second question provide a radically inclusive, holistic notion of publishing, not easy to adopt for commercial purposes, but nonetheless part of our networked lives. The projects I conduct and the ones I’m interested in show at least that digital publishing is not just about rich media, slick interfaces and smooth gestures. Hoping not to sound reactionary, I believe that traditional formats like the printed book can be the appropriate output for genuinely digital practices. After all, Print On Demand could not exist without Web 2.0, PDF, etc.

That said, some connections between experimental practices and publishing as an industry do exist. Uncreative writing is going mainstream: a few months ago a book by artist Cory Arcangel, including a curated collection of tweets containing the phrase “working on my novel”, was published by Penguin. The field of spam books is growing day by day: these algorithmic assemblages of open access materials, such as Wikipedia articles, are generated in bulk and actually sold by ad hoc publishing houses. Economist Philip M. Parker, who in 2008 had already published more than 200,000 books, even patented a way to produce them.